LoC Data Package Tutorial: Stereograph Card Collection#

This notebook will demonstrate basic usage of using Python for interacting with data packages from the Library of Congress via the Stereograph Card Images Data Package which is derived from the Library’s Stereograph Cards collection. We will:

Prerequisites#

In order to run this notebook, please follow the instructions listed in this directory’s README.

Output data package summary#

First, we will select Stereograph Card Images Data Package and output a summary of it’s contents

import io

import pandas as pd # for reading, manipulating, and displaying data

import requests

from helpers import get_file_stats

DATA_URL = 'https://data.labs.loc.gov/stereographs/' # Base URL of this data package

# Download the file manifest

file_manifest_url = f'{DATA_URL}manifest.json'

response = requests.get(file_manifest_url, timeout=120)

response_json = response.json()

files = [dict(zip(response_json["cols"], row)) for row in response_json["rows"]] # zip columns and rows

# Convert to Pandas DataFrame and show stats table

stats = get_file_stats(files)

pd.DataFrame(stats)

| FileType | Count | Size | |

|---|---|---|---|

| 0 | .jpg | 39,597 | 4.03GB |

Query the metadata in a data package#

Next we will download a data package’s metadata, print a summary of the items’ subject values, then filter by a particular subject.

All data packages have a metadata file in .json and .csv formats. Let’s load the data package’s metadata.json file:

metadata_url = f'{DATA_URL}metadata.json'

response = requests.get(metadata_url, timeout=300)

data = response.json()

print(f'Loaded metadata file with {len(data):,} entries.')

Loaded metadata file with 39,532 entries.

Next let’s convert to pandas DataFrame and print the available properties

df = pd.DataFrame(data)

print(', '.join(df.columns.to_list()))

access_restricted, aka, campaigns, contributor, coordinates, date, description, digitized, extract_timestamp, group, hassegments, id, image_url, index, language, latlong, location, location_str, locations, lonlat, mime_type, online_format, original_format, other_title, partof, reproductions, resources, shelf_id, site, subject, timestamp, title, unrestricted, url, item, related, dates, number, number_former_id, number_lccn, number_oclc, number_carrier_type, number_source_modified, type, location_city, location_country, location_state, location_county, manifest_id

Next print the top 20 most frequent Subjects in this dataset

# Since "subject" are a list, we must "explode" it so there's just one subject per row

# We convert to DataFrame so it displays as a table

df['subject'].explode().value_counts().iloc[:20].to_frame()

| subject | |

|---|---|

| stereographs | 37427 |

| photographic prints | 33217 |

| albumen prints | 3596 |

| new york (state) | 2367 |

| 2030 | |

| new york | 1906 |

| united states | 1618 |

| history | 1404 |

| italy | 1381 |

| civil war | 1281 |

| japan | 1216 |

| washington (d.c.) | 1077 |

| ( | 1040 |

| norway | 943 |

| louisiana purchase exposition | 856 |

| missouri | 849 |

| saint louis | 844 |

| saint louis, mo.) | 841 |

| switzerland | 819 |

| ireland | 722 |

Now we filter the results to only those items with subject “washington (d.c.)”

df_by_subject = df.explode('subject')

dc_set = df_by_subject[df_by_subject.subject == 'washington (d.c.)']

print(f'Found {dc_set.shape[0]:,} items with subject "washington (d.c.)"')

Found 1,077 items with subject "washington (d.c.)"

Download and display images#

First we will merge the metadata with the file manifest to link the file URL to the respective item.

df_files = pd.DataFrame(files)

dc_set_with_images = pd.merge(dc_set, df_files, left_on='id', right_on='item_id', how='inner')

print(f'Found {dc_set_with_images.shape[0]:,} dc items with image files')

Found 1,071 dc items with image files

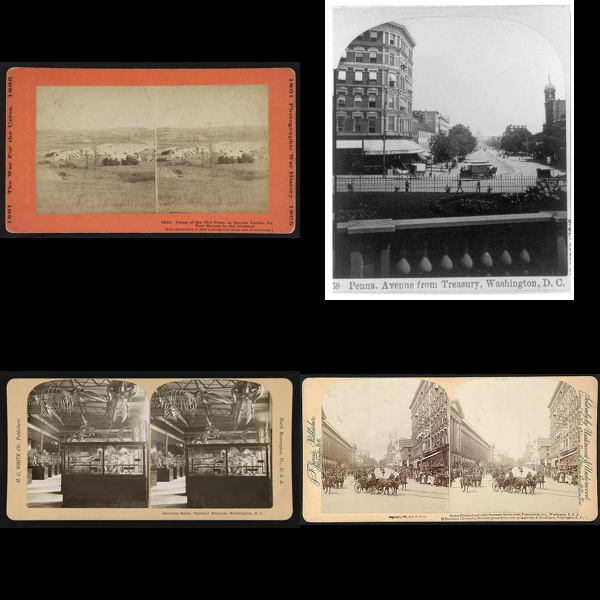

Finally we will download and display the first 4 images that have subject “washington (d.c.)”

import math

from IPython.display import display # for displaying images

from PIL import Image # for creating, reading, and manipulating images

count = 4

dc_set_with_images = dc_set_with_images.head(count).reset_index()

# Define image dimensions

image_w = 600

image_h = 600

cols = math.ceil(count / 2.0)

rows = math.ceil(count / 2.0)

cell_w = image_w / cols

cell_h = image_h / rows

# Create base image

base_image = Image.new("RGB", (image_w, image_h))

# Loop through image URLs

i = 0

for i, row in dc_set_with_images.iterrows():

file_url = f'https://{row["object_key"]}'

# Downoad the image to memory

response = requests.get(file_url, timeout=60)

image_filestream = io.BytesIO(response.content)

# And read the image data

im = Image.open(image_filestream)

# Resize it as a thumbnail

im.thumbnail((cell_w, cell_h))

tw, th = im.size

# Position it

col = i % cols

row = int(i / cols)

offset_x = int((cell_w - tw) * 0.5) if tw < cell_w else 0

offset_y = int((cell_h - th) * 0.5) if th < cell_h else 0

x = int(col * cell_w + offset_x)

y = int(row * cell_h + offset_y)

# Paste it

base_image.paste(im, (x, y))

i += 1

# Display the result

display(base_image)